Introduction to Generative Artificial Intelligence

Generative artificial intelligence (AI) is a branch of artificial intelligence focused on creating new content, such as text, images, and music. Unlike AI systems built to classify inputs, make predictions, or follow predefined rules, generative AI models learn the patterns in their training data and use them to produce new outputs that resemble human-created work.

Defining Artificial Intelligence and Generative AI

Artificial intelligence (AI) is a broad field encompassing various techniques and technologies aimed at creating systems capable of performing tasks that typically require human intelligence. Generative AI, a subset of AI, specifically involves models that can produce new content. These models learn patterns from existing data and use this knowledge to generate novel outputs.

Historical Context of Generative AI

The roots of generative AI can be traced back to the early experiments in machine learning and neural networks. Initial models were limited in scope and capability, often producing rudimentary results. Over the decades, advancements in computational power, algorithm design, and data availability have significantly enhanced the potential and performance of generative AI models.

Common Examples of Early Generative AI

Early examples of generative AI include simple text generators and basic image synthesis programs. These early models relied on fundamental statistical methods and were limited by the computational resources and datasets available at the time. Despite their limitations, they laid the groundwork for the sophisticated generative AI systems we see today.

The Emergence of ChatGPT and GPT-4

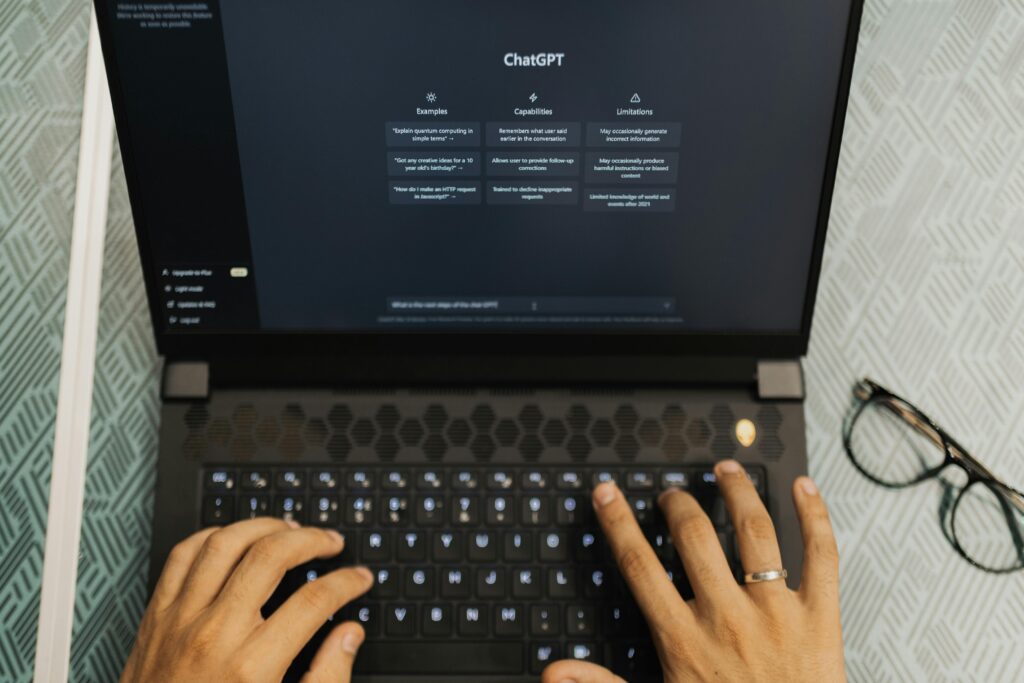

The release of ChatGPT in November 2022 marked a significant milestone in the evolution of generative AI. ChatGPT was initially built on the GPT-3.5 model family and was later offered with GPT-4. Building upon its predecessors, it introduced enhanced capabilities in natural language understanding and generation, setting new standards for conversational AI systems.

Capabilities of ChatGPT

ChatGPT is capable of generating coherent and contextually relevant text based on input prompts. It can engage in detailed conversations, provide explanations, compose essays, and even create poetry. Its ability to understand and generate human-like text has made it a versatile tool in various applications, from customer service to content creation.

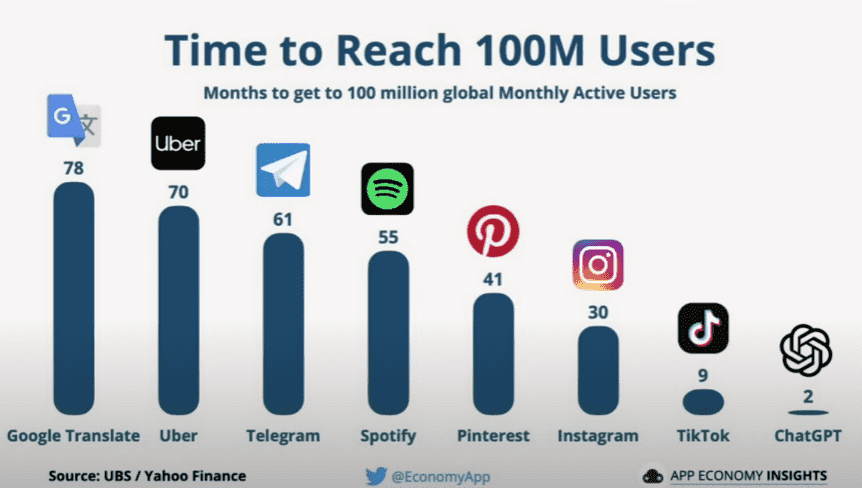

Popularity and User Adoption

The adoption of ChatGPT has been widespread, with users appreciating its ability to assist in diverse tasks. Businesses, educators, researchers, and individuals have integrated ChatGPT into their workflows to enhance productivity, creativity, and engagement. Its user-friendly interface and reliable performance have contributed to its growing popularity.

The Technology Behind ChatGPT

The underlying technology of ChatGPT involves deep learning techniques, particularly those related to natural language processing (NLP). At the core of ChatGPT is the Generative Pre-trained Transformer (GPT) architecture, which employs transformer networks to process and generate text based on vast amounts of training data.

Understanding Language Modelling

Language modelling involves predicting the next word (more precisely, the next token) in a sequence based on the preceding ones. Doing this well requires the model to capture syntax, semantics, and context. Language models such as GPT are trained on this objective, which enables them to generate text that is grammatically correct and contextually appropriate.
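Next-word prediction can be illustrated with a bigram model, which looks only at the single preceding word. This is a minimal sketch, not how GPT works internally: GPT conditions on a long context with a transformer network. The toy corpus and the function names `train_bigram` and `next_word_probs` are assumptions made for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count how often each word follows each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn the follower counts for `prev` into a probability distribution."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # `prev` was never seen with a following word
    return {word: count / total for word, count in followers.items()}

# Toy corpus, invented for illustration.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(probs)  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```

After "the", the model assigns the highest probability to "cat" because that pair occurs most often in the corpus.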

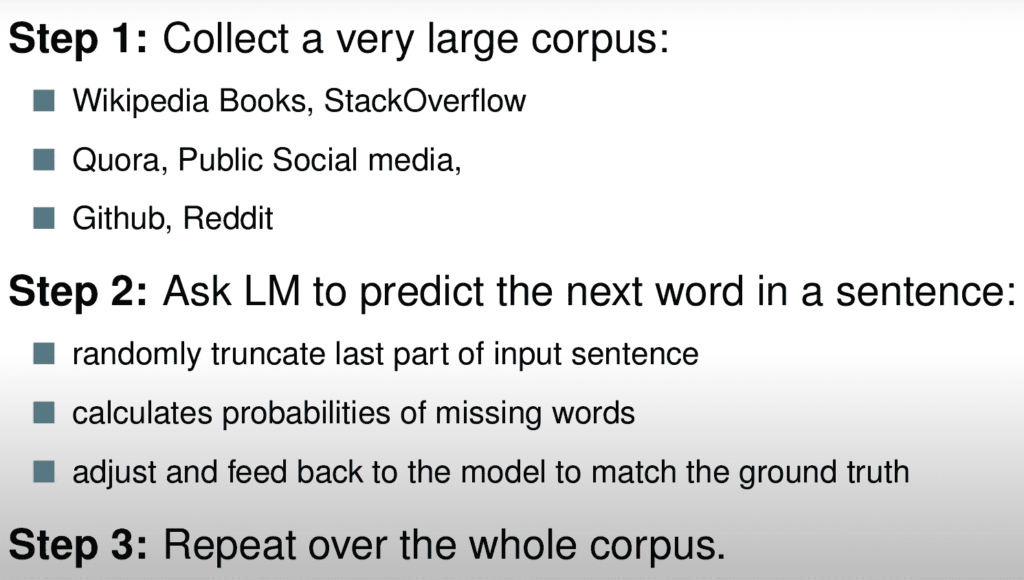

Building a Language Model

Building a language model involves several steps, including data collection, preprocessing, model architecture design, training, and evaluation. Large datasets comprising diverse text sources are used to train the model, ensuring it can handle a wide range of topics and language styles.
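The steps listed above can be sketched end to end on a toy scale. The example below assumes a deliberately simple "architecture" (a unigram model with add-one smoothing) and uses perplexity as the evaluation metric. The documents, function names, and the choice to build the vocabulary from all documents are simplifications made for illustration.

```python
import math
from collections import Counter

def preprocess(doc):
    """Lowercase and split on whitespace (a stand-in for real tokenization)."""
    return doc.lower().split()

def train_unigram(tokens, vocab):
    """Estimate add-one-smoothed word probabilities over a fixed vocabulary."""
    counts = Counter(tokens)
    total = len(tokens) + len(vocab)
    return {word: (counts[word] + 1) / total for word in vocab}

def perplexity(model, tokens):
    """Exponentiated average negative log-probability; lower is better."""
    log_prob = sum(math.log(model[token]) for token in tokens)
    return math.exp(-log_prob / len(tokens))

# 1. Data collection (toy documents, invented for illustration)
documents = ["The cat sat on the mat", "The dog sat on the rug", "The cat saw the dog"]

# 2. Preprocessing
tokenized = [preprocess(doc) for doc in documents]

# 3. Split into training and held-out data.
#    Simplification: the vocabulary covers all documents, so no word is unknown.
train_tokens = tokenized[0] + tokenized[1]
held_out = tokenized[2]
vocab = sorted({token for doc in tokenized for token in doc})

# 4. Training
model = train_unigram(train_tokens, vocab)

# 5. Evaluation on text the model was not trained on
ppl = perplexity(model, held_out)
print(f"held-out perplexity: {ppl:.2f}")
```

Real pipelines differ at every step (subword tokenization, neural architectures, far larger corpora), but the overall shape of collect, preprocess, train, and evaluate is the same.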

The Role of Neural Networks

Neural networks, particularly deep neural networks, play a crucial role in language modelling. These networks consist of multiple layers of interconnected nodes that process input data and learn complex patterns. In the context of ChatGPT, neural networks enable the model to understand and generate human-like text by capturing intricate linguistic patterns.
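To make "layers of interconnected nodes" concrete, here is a minimal sketch of a forward pass through a two-layer network that maps one input word to a probability distribution over the next word. The weights are random and untrained, so the output is meaningless; the point is only the data flow. The vocabulary, layer sizes, and function names are assumptions for illustration, and real language models use transformer layers rather than this plain feed-forward design.

```python
import math
import random

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, w_hidden, w_out):
    """Input -> hidden layer (tanh) -> output layer (softmax)."""
    hidden = [math.tanh(h) for h in matvec(w_hidden, x)]
    return softmax(matvec(w_out, hidden))

random.seed(0)
vocab = ["the", "cat", "sat", "mat"]
hidden_size = 3

# Randomly initialised weights: one row per node in the receiving layer.
w_hidden = [[random.uniform(-1, 1) for _ in vocab] for _ in range(hidden_size)]
w_out = [[random.uniform(-1, 1) for _ in range(hidden_size)] for _ in vocab]

x = [1.0, 0.0, 0.0, 0.0]  # one-hot encoding of "the"
probs = forward(x, w_hidden, w_out)
for word, p in zip(vocab, probs):
    print(f"P({word} | the) = {p:.3f}")
```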

Training Neural Networks for Language Modelling

Training neural networks for language modelling involves exposing the model to extensive datasets and iteratively adjusting the model parameters to minimize prediction errors. The adjustments are made by gradient descent, and the gradients that tell each parameter how to change are computed by an algorithm called backpropagation. Repeating this process allows the model to learn from its mistakes and improve its performance over time.
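The training loop can be sketched with a very small model: a table of scores (logits) with one row per previous word, trained by gradient descent on the cross-entropy of the next word. For this single-layer model the gradient has a closed form (predicted probability minus the one-hot target), so no general backpropagation code is needed. The corpus, learning rate, and epoch count are arbitrary values chosen for illustration.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train(pairs, vocab_size, epochs=200, lr=0.1):
    """Fit next-word logits with per-example gradient descent."""
    weights = [[0.0] * vocab_size for _ in range(vocab_size)]
    losses = []
    for _ in range(epochs):
        total_loss = 0.0
        for prev, nxt in pairs:
            probs = softmax(weights[prev])
            total_loss += -math.log(probs[nxt])  # cross-entropy for this example
            for j in range(vocab_size):
                # Gradient of cross-entropy w.r.t. logit j: p_j - 1[j == target]
                grad = probs[j] - (1.0 if j == nxt else 0.0)
                weights[prev][j] -= lr * grad
        losses.append(total_loss / len(pairs))
    return weights, losses

words = "the cat sat on the mat".split()
vocab = sorted(set(words))
ids = [vocab.index(w) for w in words]
pairs = list(zip(ids, ids[1:]))  # (previous word, next word) training examples

weights, losses = train(pairs, len(vocab))
print(f"loss: first epoch {losses[0]:.3f}, last epoch {losses[-1]:.3f}")
```

The average loss falls as training proceeds. It cannot reach zero here, because "the" is followed by both "cat" and "mat" in the corpus, so the best the model can do is split its probability between them.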

The Importance of Large Datasets

Large datasets are essential for training effective language models. They provide the model with a diverse range of examples, allowing it to learn various linguistic structures, vocabulary, and context. The quality and quantity of training data directly impact the model’s ability to generate accurate and contextually relevant text.

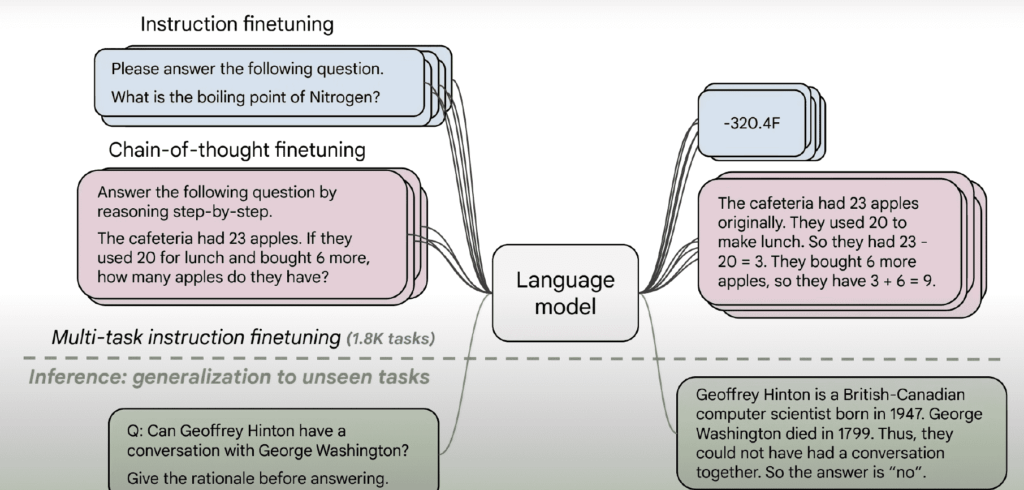

The Concept of Pre-Training

Pre-training involves training a model on a large corpus of text data to learn general language patterns. This pre-trained model can then be fine-tuned on specific tasks or domains, enhancing its performance in targeted applications. Pre-training is a crucial step in developing robust and versatile language models like ChatGPT.

Fine-Tuning for Specific Tasks

Fine-tuning involves further training a pre-trained model on a specialized dataset tailored to a particular task or domain. This process allows the model to adapt its general language understanding to the nuances of specific applications, improving its accuracy and relevance in those contexts.
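The two-stage idea of pre-training followed by fine-tuning can be sketched with a small next-word model: train it first on general text, then continue training the same weights on domain text. Everything here is a toy assumption: the two corpora, the single-layer model, and the hyperparameters (fewer epochs and a smaller learning rate for fine-tuning, a common but not universal choice).

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train(pairs, weights, epochs, lr):
    """Gradient descent on next-word cross-entropy, updating weights in place."""
    for _ in range(epochs):
        for prev, nxt in pairs:
            probs = softmax(weights[prev])
            for j in range(len(probs)):
                weights[prev][j] -= lr * (probs[j] - (1.0 if j == nxt else 0.0))
    return weights

def to_pairs(text, vocab):
    """Turn text into (previous word id, next word id) examples."""
    ids = [vocab.index(word) for word in text.split()]
    return list(zip(ids, ids[1:]))

# Toy corpora, invented for illustration.
general = "the bank of the river . the bank of the lake ."
domain = "the bank approved the loan . the bank approved the mortgage ."
vocab = sorted(set((general + " " + domain).split()))
bank, approved = vocab.index("bank"), vocab.index("approved")

# Pre-training: start from zeros and learn from general text.
weights = [[0.0] * len(vocab) for _ in vocab]
train(to_pairs(general, vocab), weights, epochs=100, lr=0.1)
before = softmax(weights[bank])[approved]

# Fine-tuning: keep the pre-trained weights and continue on domain text.
train(to_pairs(domain, vocab), weights, epochs=50, lr=0.05)
after = softmax(weights[bank])[approved]

print(f"P(approved | bank): before {before:.3f}, after {after:.3f}")
```

Fine-tuning raises the probability of the domain-specific continuation, while rows of the weight table that the domain text never touches (for example, what follows "of") keep what was learned during pre-training.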

The Impact of Model Size on Performance

The size of a language model, measured by its number of parameters, significantly influences its performance. Larger models, such as the later models in the GPT series, can generally capture more complex patterns and generate higher-quality text. However, increasing model size also poses challenges related to computational resources and training time.
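A rough sense of where the parameters come from can be had with a common back-of-the-envelope estimate for decoder-only transformers: about 12 × d_model² weights per layer, plus the token embedding table. This is an approximation that ignores biases, layer normalization, and position embeddings. The layer counts and widths below are the published configurations of GPT-2 XL and GPT-3; the function name is an assumption for illustration.

```python
def estimate_parameters(n_layers, d_model, vocab_size):
    """Approximate parameter count of a decoder-only transformer.

    Per layer: about 4 * d_model^2 attention weights plus about
    8 * d_model^2 feed-forward weights (assuming a 4x hidden expansion).
    Biases, layer norms, and position embeddings are ignored.
    """
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model
    return n_layers * per_layer + embeddings

gpt2_xl = estimate_parameters(n_layers=48, d_model=1600, vocab_size=50257)
gpt3 = estimate_parameters(n_layers=96, d_model=12288, vocab_size=50257)

print(f"GPT-2 XL estimate: {gpt2_xl / 1e9:.2f} billion parameters")
print(f"GPT-3 estimate:    {gpt3 / 1e9:.1f} billion parameters")
```

The estimates land close to the published figures of roughly 1.5 billion and 175 billion parameters, which shows that parameter count grows linearly with depth and quadratically with layer width.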

Comparing Model Parameters Over Time

Over time, advancements in model architecture and training techniques have led to a steep increase in the number of parameters in language models. GPT-1 (2018) had about 117 million parameters, GPT-2 (2019) about 1.5 billion, and GPT-3 (2020) about 175 billion; OpenAI has not published a parameter count for GPT-4. Comparing these figures across generations highlights the continuous improvement in performance and capabilities, and the progression from GPT-1 to GPT-4 exemplifies this trend.

The Relationship Between Model Size and Text Input

The relationship between model size and text input is complex. Larger models tend to generate more accurate and coherent text, but they also require more computational resources and time for training and inference. Balancing model size with practical considerations is a key aspect of developing effective language models.
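The cost side of this trade-off can be estimated with a widely used rule of thumb: training takes roughly 6 floating-point operations per parameter per training token, and generating one token at inference takes roughly 2 per parameter. These are approximations, not exact costs, and the function names are assumptions for illustration. The model sizes and the 300 billion token count correspond to the smallest and largest models described in the GPT-3 paper.

```python
def training_flops(n_params, n_tokens):
    """Rule-of-thumb training cost: about 6 FLOPs per parameter per token."""
    return 6 * n_params * n_tokens

def inference_flops_per_token(n_params):
    """Rule-of-thumb generation cost: about 2 FLOPs per parameter per token."""
    return 2 * n_params

small = 125_000_000          # 125 million parameters
large = 175_000_000_000      # 175 billion parameters
tokens = 300_000_000_000     # 300 billion training tokens

ratio = training_flops(large, tokens) / training_flops(small, tokens)
print(f"training FLOPs, large model: {training_flops(large, tokens):.2e}")
print(f"large vs small training cost: {ratio:.0f}x")
print(f"inference FLOPs per token, large model: {inference_flops_per_token(large):.2e}")
```

Under this estimate, cost scales linearly with parameter count for a fixed amount of training text, so the larger model is 1400 times more expensive to train than the smaller one.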

Future Prospects and Ethical Considerations

The future of generative AI holds immense potential for innovation and application across various fields. However, it also raises ethical considerations related to bias, misuse, and the impact on employment. Addressing these challenges requires careful attention to ethical guidelines, transparency, and the responsible deployment of AI technologies.

Conclusion and Key Takeaways

Generative AI, exemplified by ChatGPT and the GPT-4 architecture, represents a significant advancement in the field of artificial intelligence. Understanding the underlying technology, training processes, and ethical implications is crucial for leveraging these models effectively and responsibly. As the technology continues to evolve, it promises to unlock new possibilities and transform various aspects of our lives.